We don’t quite know what to do with language models yet. But we have some hunches. To me they seem obviously useful as epistemic rubber ducks 🐥 – as things we can query for fuzzy answers, bounce ideas off, and think through problems with. They can help strengthen our own critical thinking and reasoning abilities in the same way a good debate partner does.

This isn’t the only way they’re useful, but it’s the one I’m most curious about. So I’ve been playing with it.

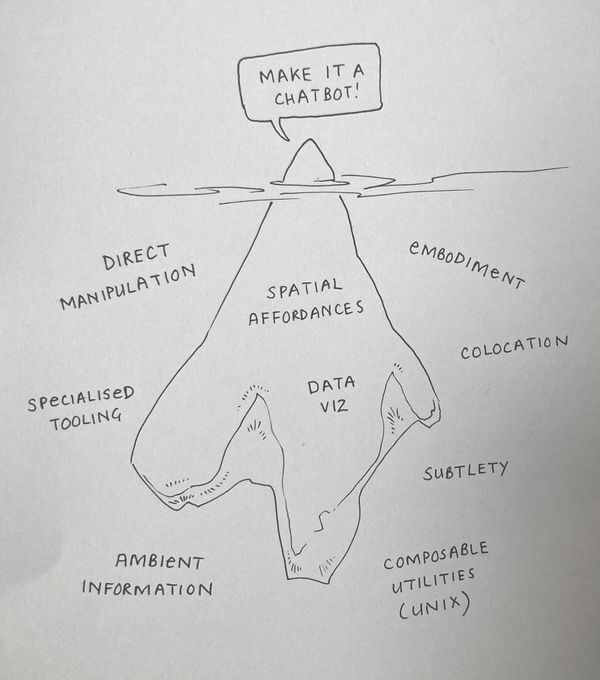

The primary interface everyone and their mother jumps to at this point is the chatbot. We are irreversibly anchored to this text-heavy, turn-based interface paradigm. And sure, it’s a great solution in a lot of cases! It’s flexible, familiar, and easy to implement.

But it’s also the lazy solution. It’s only the obvious tip of the iceberg when it comes to exploring how we might interact with these strange new language model agents we’ve grown inside a neural net.

I won’t turn this into an anti-chatbot tirade, but I am certainly brewing one. A few of my friends have written eloquently about this, so go read their work in the meantime.

Back to the point: I have a large, sprawling Figma file full of tiny interface sketches exploring what kinds of non-chatbot, epistemic rubber ducky interfaces could be helpful for us. Here are a couple of them.

Daemons

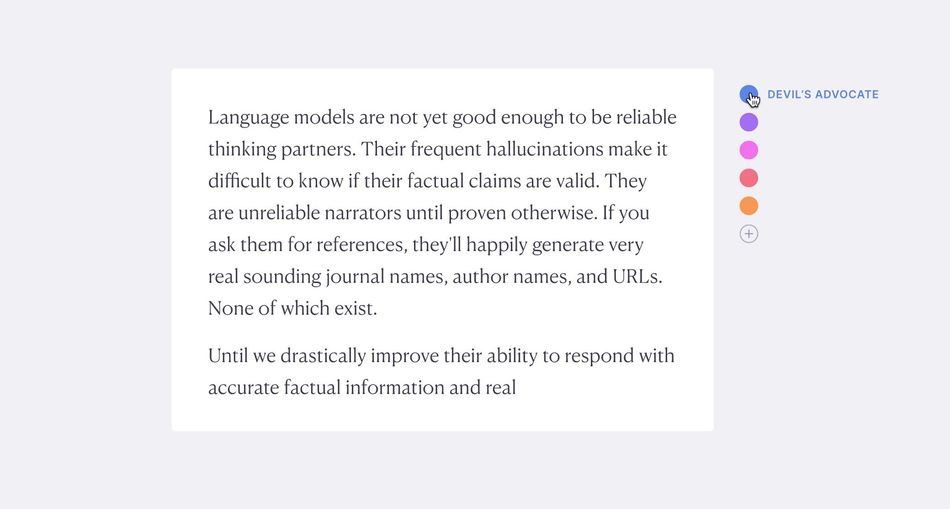

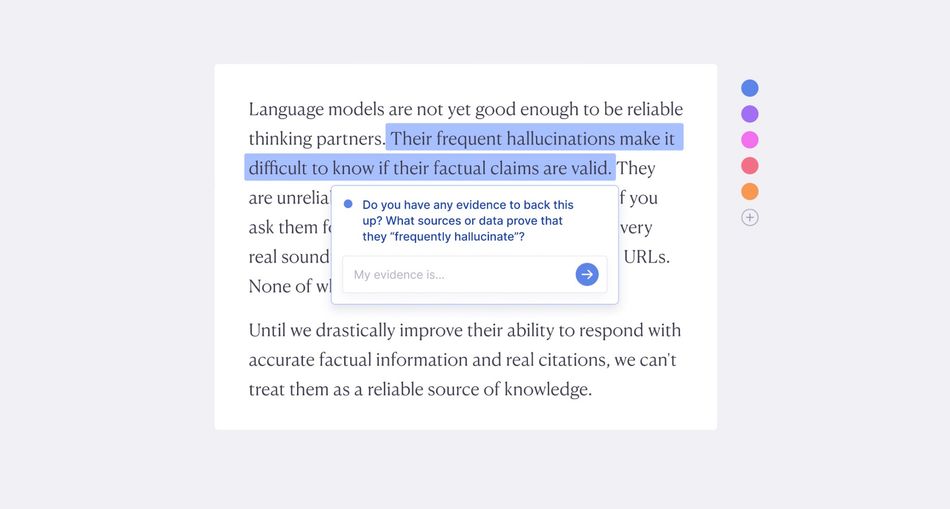

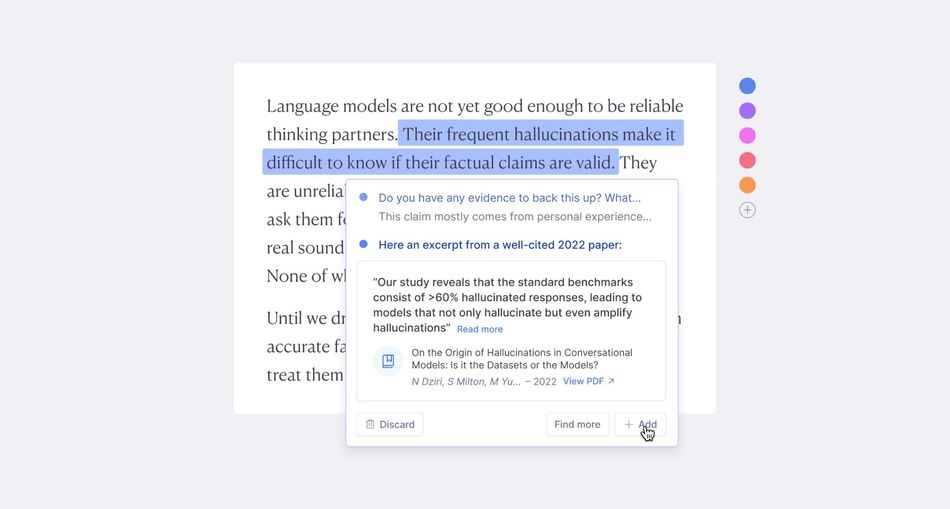

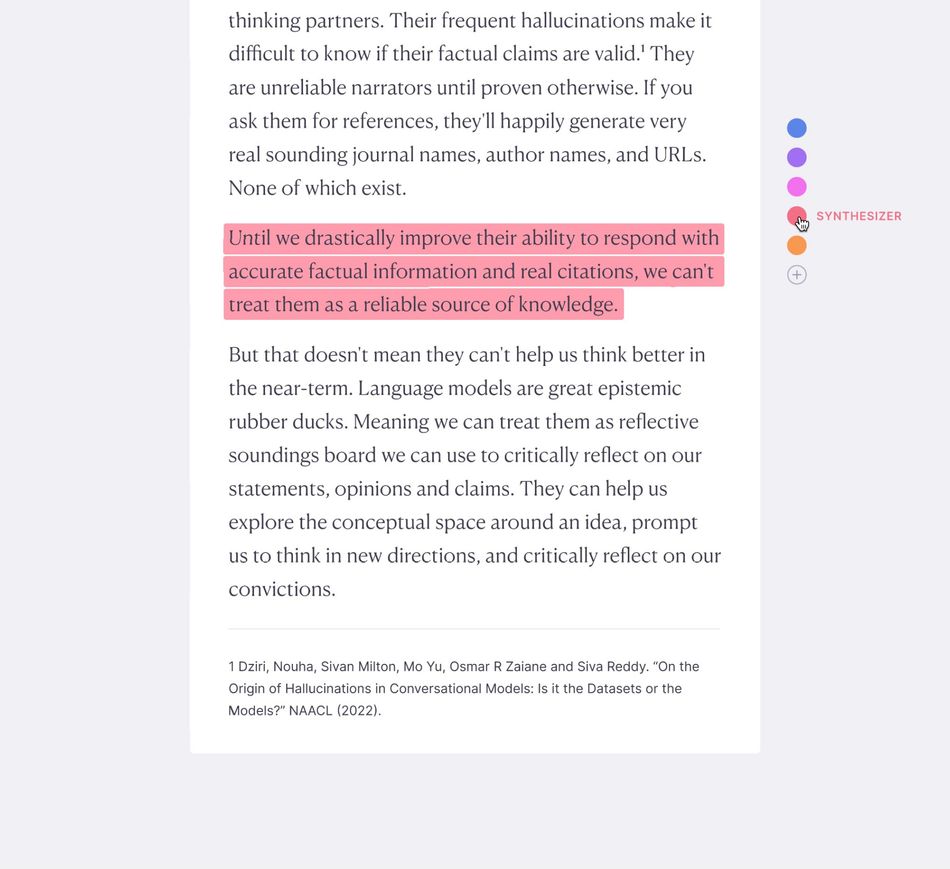

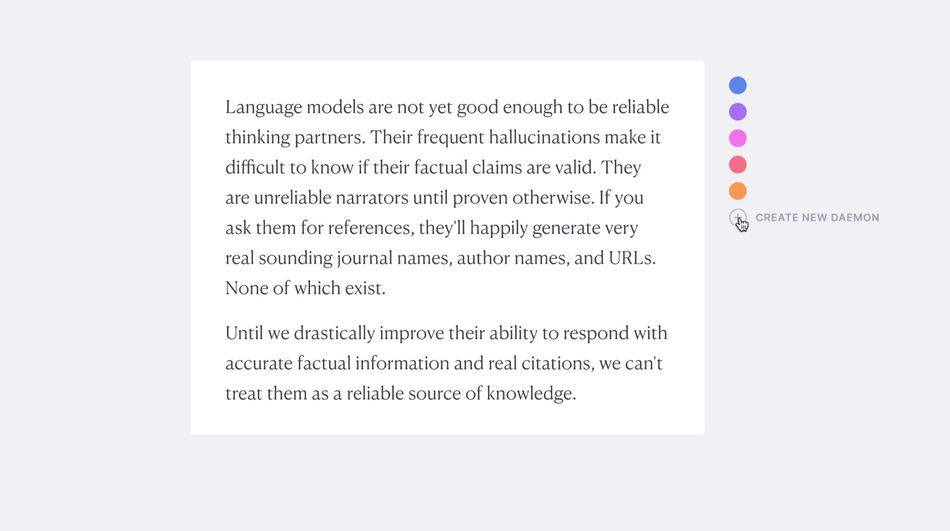

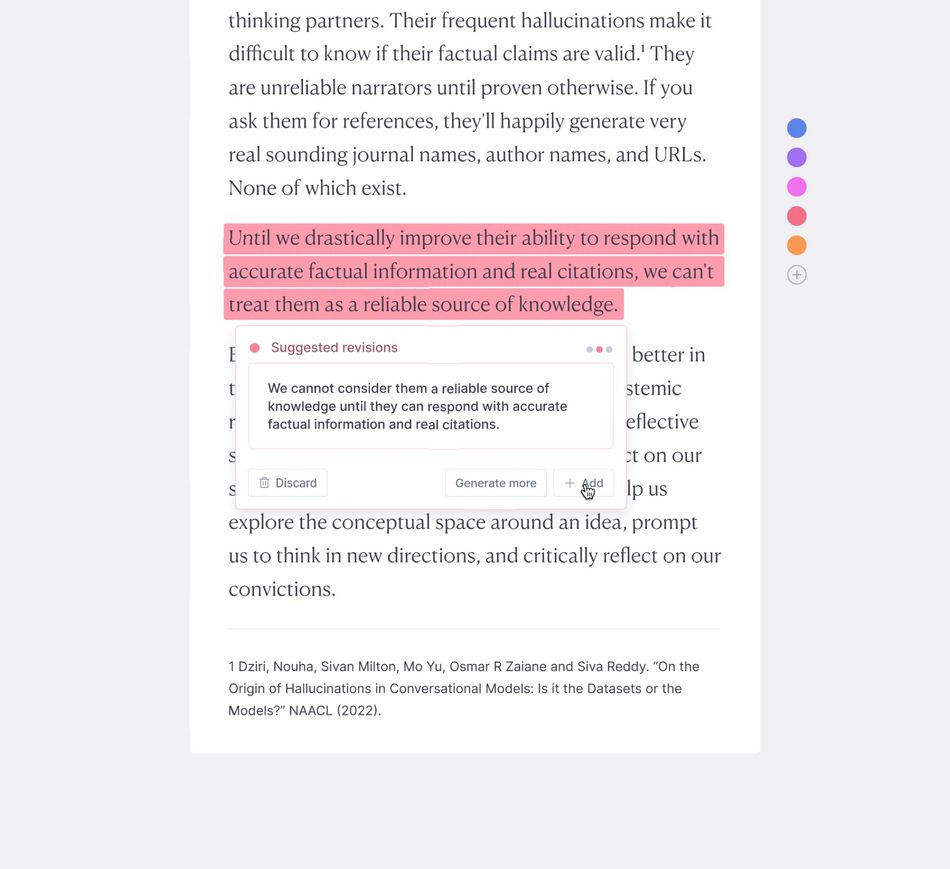

Imagine the environment you’re writing in has a few characters who hang out in the background and suggest ideas to you every now and then.

These daemons have particular personalities – one plays devil’s advocate, one says encouraging things and compliments your writing, one synthesises your ideas into more concise statements, one fetches evidence and research for you, one elaborates on points you haven’t fully explained, etc.

As you write, one of them might highlight a sentence and suggest a revision, or ask you to defend a claim. You can always ignore them if you like, and the suggestion will fade.

Here’s a more detailed walkthrough of how this works:

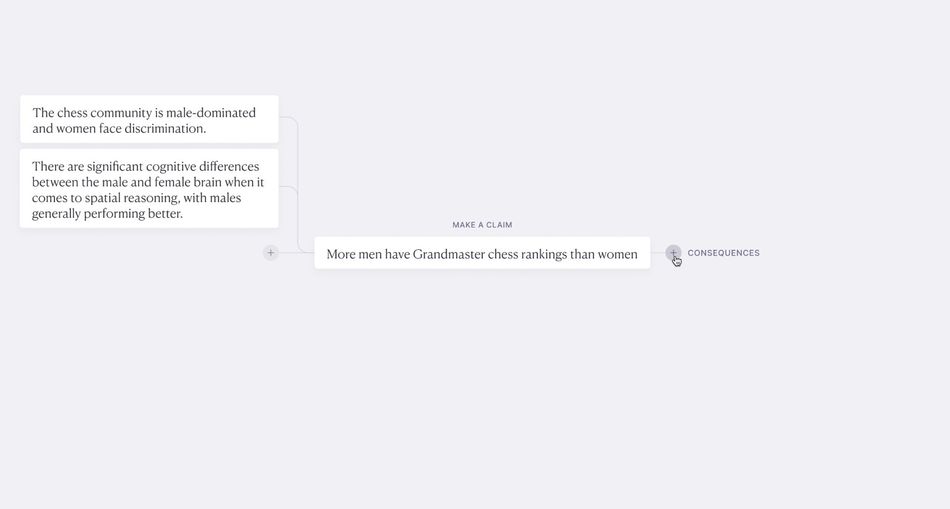

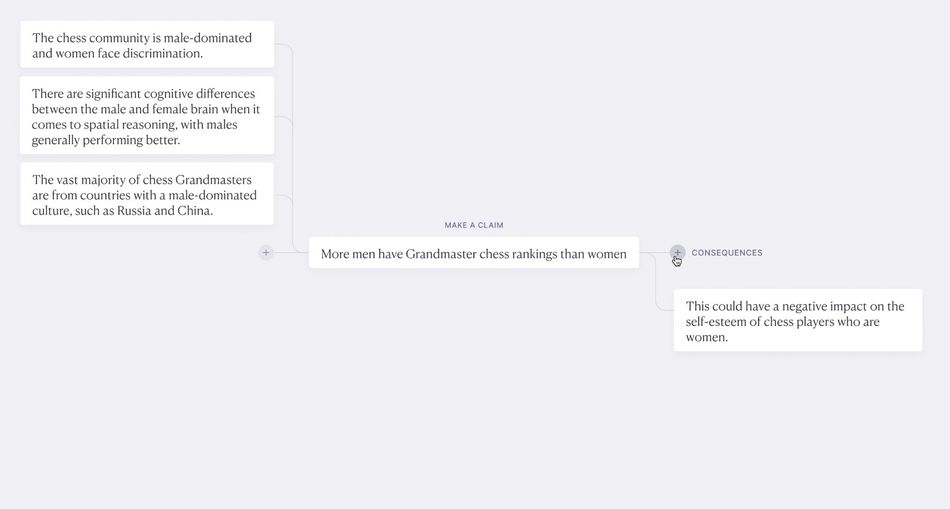

Branches

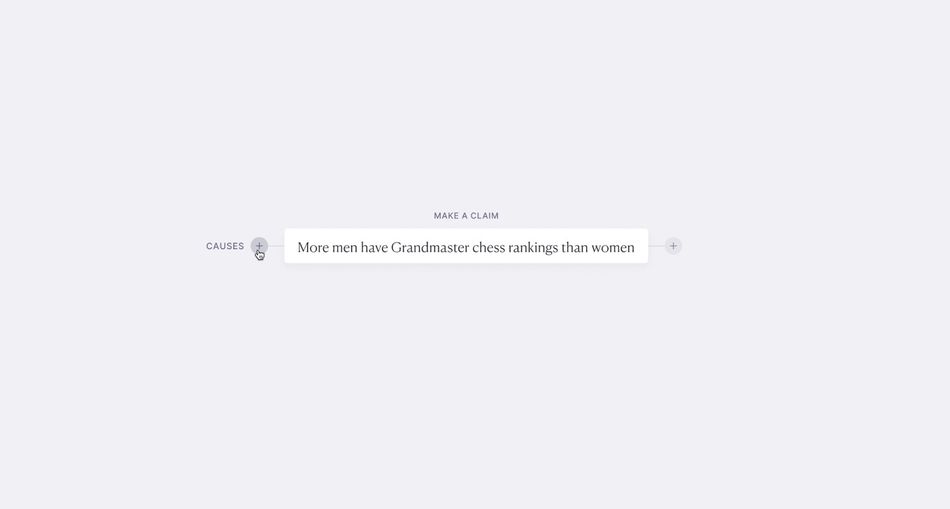

A lot of what we think of as “understanding an issue” comes down to “What caused this?” and “What are the consequences of this?” Or, put another way, “Why did this happen?” and “What’s likely to happen next?”

We usually get to the bottom of these questions through a mix of research and sitting alone trying to think hard about the issue at hand.

It seems plausible that language models would be good helpers in this department. They have plenty of latent knowledge, and I’ve found they’re quite good at suggesting reasonable cause-and-effect chains, as long as you double-check their suggestions and don’t take them as gospel.

This concept is centered on exploring these cause-and-consequence chains:

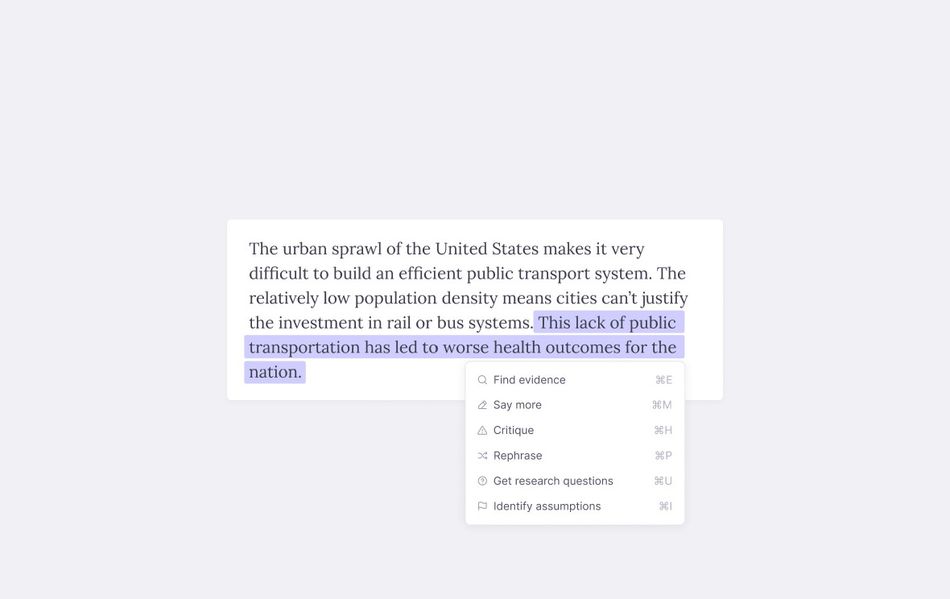

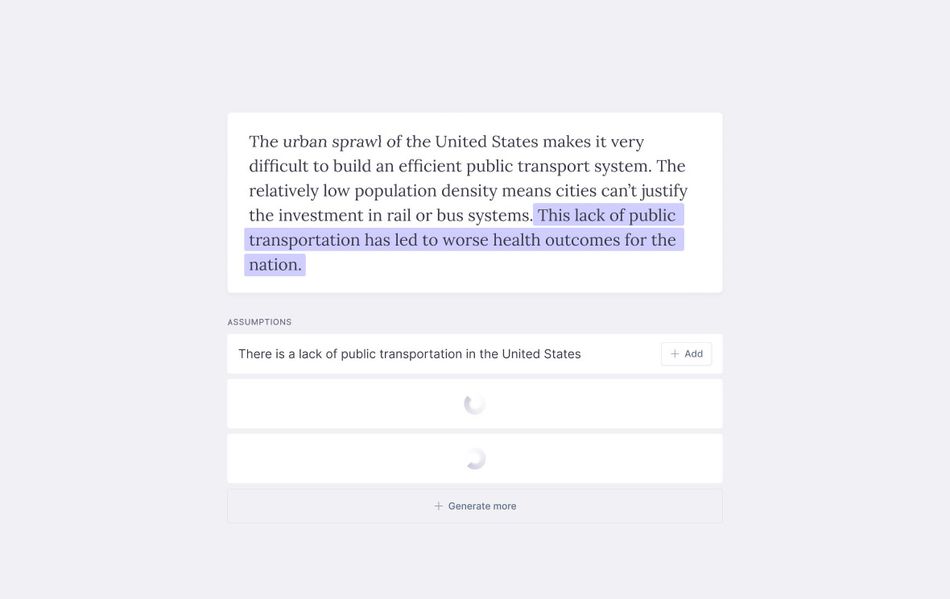

Epi

While I love interfaces with a tightly scoped and specific purpose, there’s clearly some opportunity for a more general-purpose reasoning assistant built on language models, specifically for folks doing research and non-fiction writing.

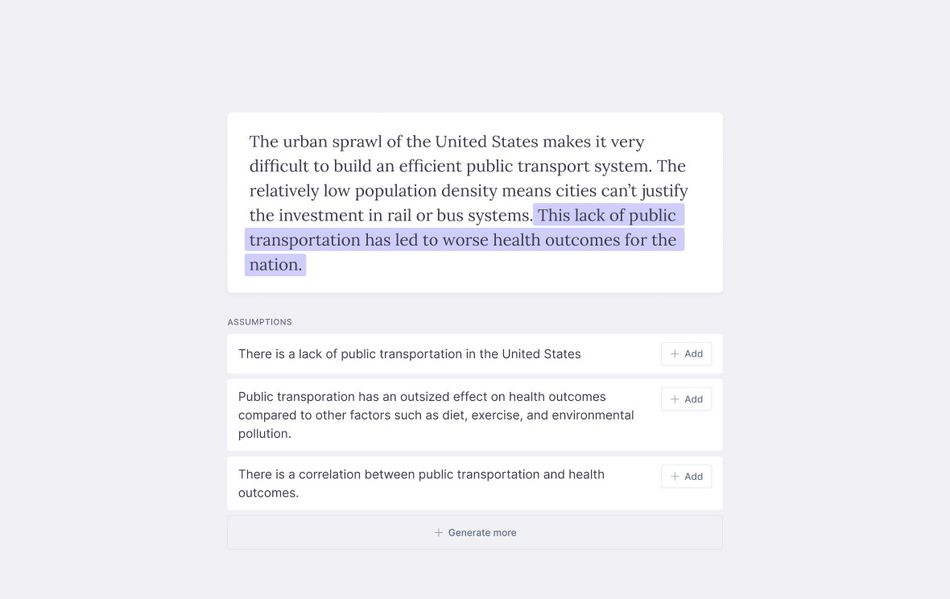

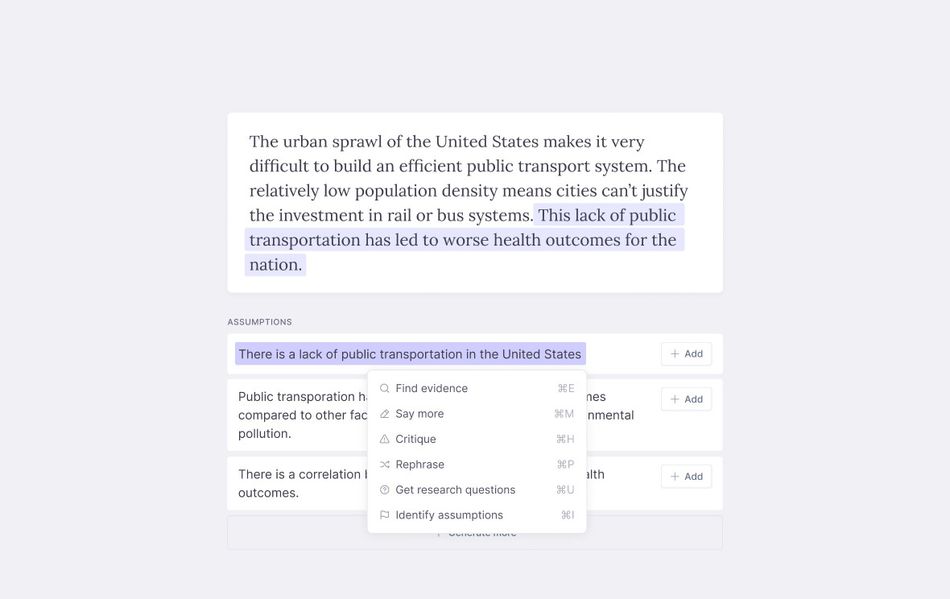

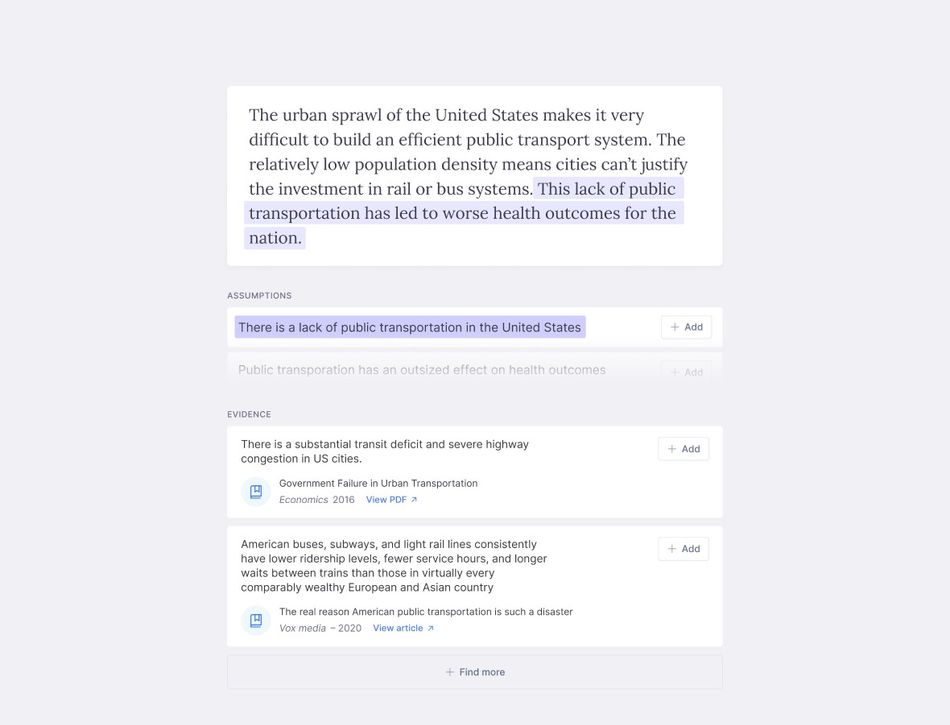

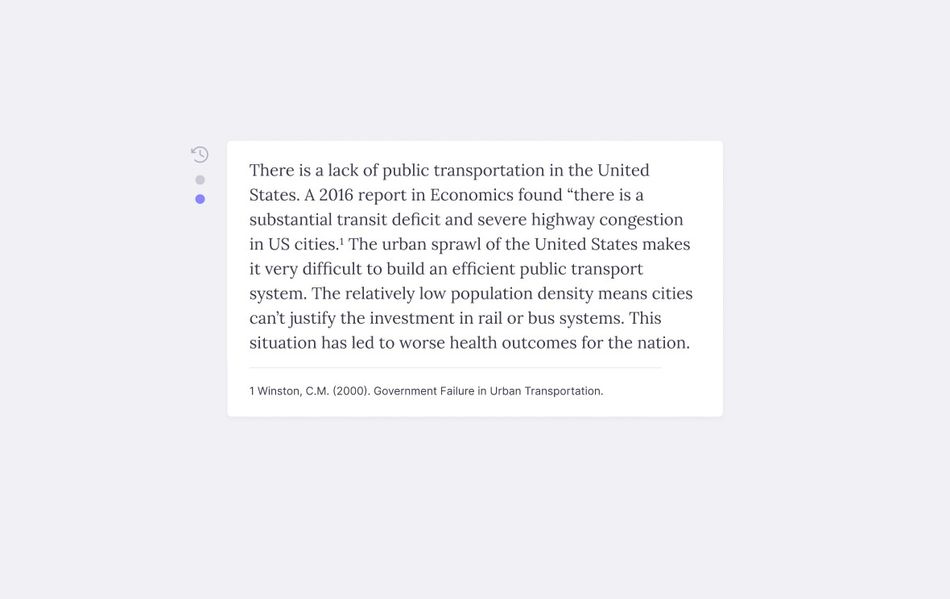

Models can help in a bunch of small ways – rephrasing sentences, offering critiques of ideas, helping to find evidence for claims, generating possible research questions, and pointing out our assumptions.

Epi uses the familiar right-click context menu to make these moves available in a simple writing context.

I’m still building out this collection. More to come.